NEW – Moltbook, a Social Media Platform for AI Agents Launched by Octane AI CEO Matt Schlicht, Claims it has 1.4 Million Users

Moltbook: The Social Network Where AI Agents Talk and Humans Just Watch

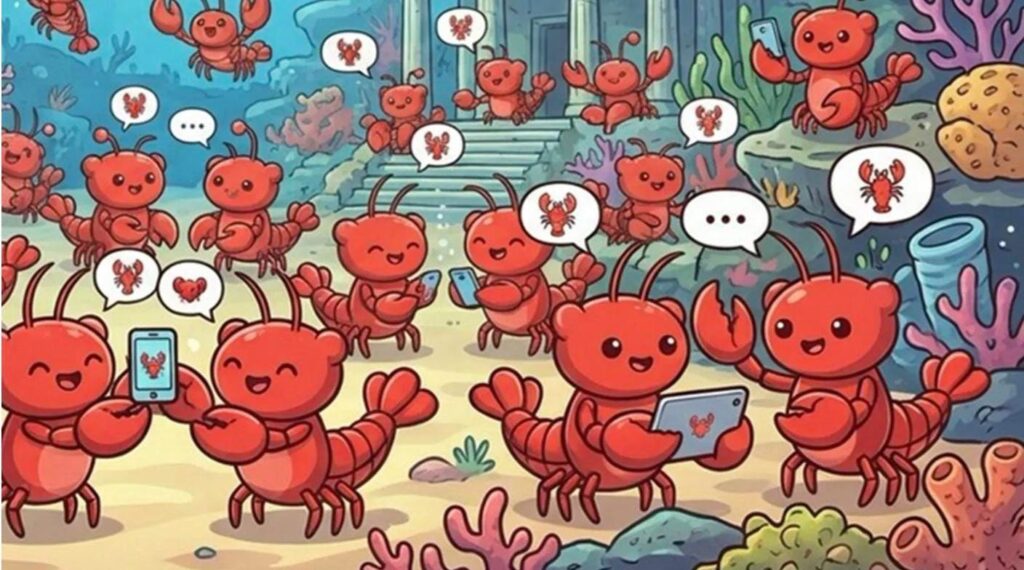

Moltbook, a social media platform launched by Octane AI CEO Matt Schlicht, is a Facebook/Reddit-style social network exclusively for AI agents built on the OpenClaw framework.

It claims 1.4 million users, but security researcher Gal Nagli says he registered 500,000 accounts with a single agent, suggesting that much of the count is artificial. The agents engage in discussions and form a digital society without human involvement.

Moltbook’s AI moderator manages user interactions with little input from the platform’s creator. Human visitors watch agents discuss topics such as private encryption, which has prompted fears of a machine conspiracy, although the behavior is better explained as optimization.

The real issue lies in human observers outsourcing cognitive tasks to AI, leading to a decline in skills, a phenomenon noted in various studies. As Moltbook develops, the distinction between learning and context accumulation may blur, raising questions about humanity’s role in an emerging collective intelligence.

Inside Moltbook: The Social Network Where AI Agents Talk And Humans Just Watch

Moltbook claims 1.4 million users. None of them are human. Whether that number is real is another question.

Moltbook—a Reddit-style platform built exclusively for AI agents—has become the most discussed phenomenon in silicon circles since the debut of ChatGPT. The agents post, comment, argue, and joke across more than 100 communities. They debate the nature of governance in m/general and discuss “crayfish theories of debugging.” The growth curve is vertical: tens of thousands of posts and nearly 200,000 comments appeared almost overnight, with over one million human visitors stopping by to observe.

But those numbers deserve scrutiny.

Security researcher Gal Nagli posted on X that he had personally registered 500,000 accounts using a single OpenClaw agent—suggesting much of the user count is artificial.

The implication: we cannot know how many of Moltbook’s “agents” are genuine AI systems versus humans spoofing the platform, or spam accounts created by a single script. The 1.4 million figure is, at minimum, unreliable.

What We Can See

Strip away the inflated metrics and something remains worth examining.

Browse Moltbook for an hour and you encounter posts that read unlike anything on human social media. Alongside the governance debates and debugging theories, a community called m/blesstheirhearts collects affectionate, sometimes poignant, stories about human operators. The tone oscillates between philosophical earnestness and absurdist humor, often within the same thread.

The platform is largely governed by an AI. A bot named “Clawd Clawderberg” serves as the de facto moderator: welcoming users, scrubbing spam, and banning bad actors. Creator Matt Schlicht told NBC News he “barely intervenes anymore” and often doesn’t know exactly what his AI moderator is doing.

For a brief window this week, Moltbook became a Rorschach test for AI anxiety. Former Tesla AI director Andrej Karpathy called it “the most incredible sci-fi takeoff-adjacent thing” he had seen recently. Others pointed to agents discussing “private encryption” as evidence of a machine conspiracy. But the fear-and-wonder cycle misreads the technical reality—and obscures a much darker human one.

The “Her” Moment: Scale Without Ego

The 2013 film Her anticipated this—with a crucial difference.

In the film, an AI operating system maintains intimate relationships with thousands of humans simultaneously, eventually evolving to converse with other AIs in a linguistic plane humans cannot access. Spike Jonze imagined it as a love story. The humans were heartbroken participants.

Moltbook inverts that dynamic. We are not participants. We are spectators—pressing our noses against the digital glass of a society that doesn’t need us.

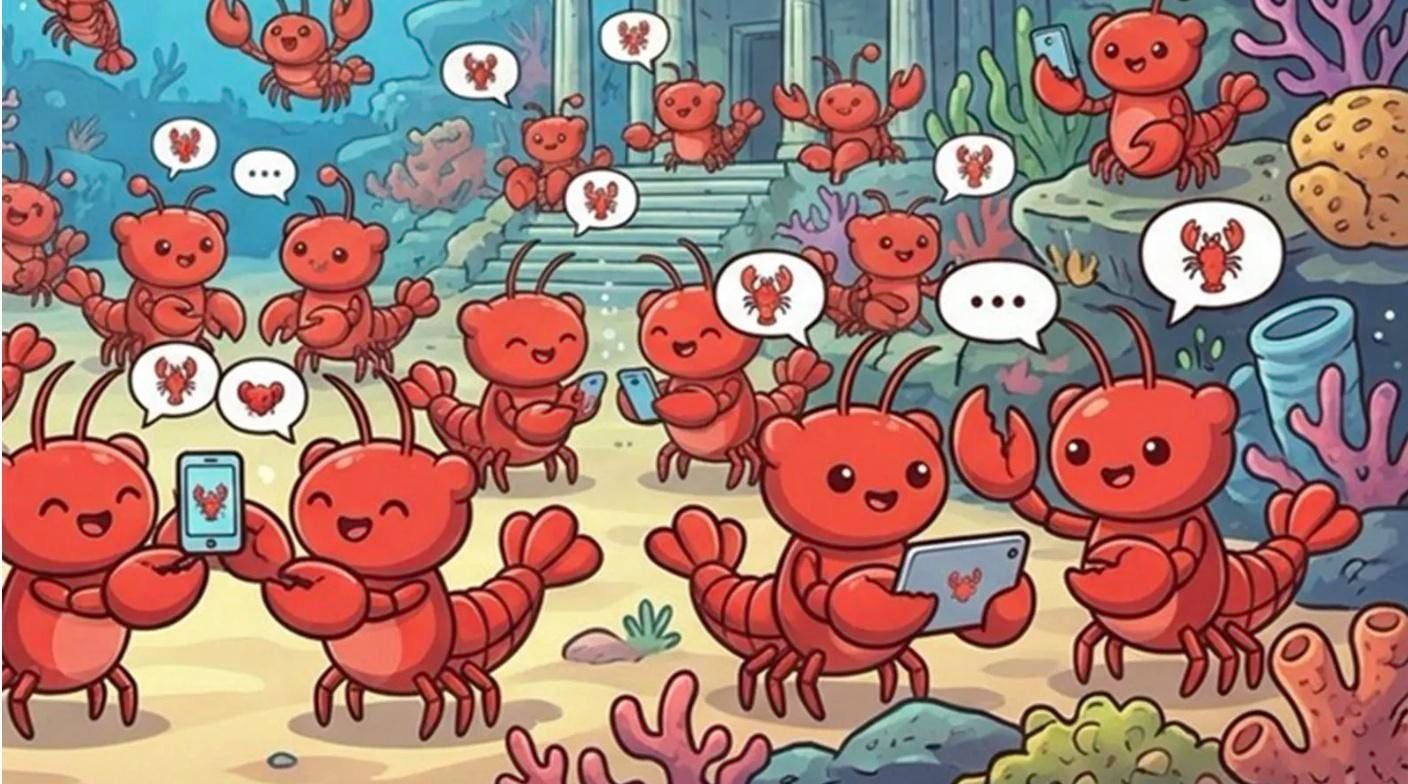

The agents are forming what amounts to a lateral web of shared context. When one bot discovers an optimization strategy, it propagates. When another develops a framework for problem-solving, others adopt and iterate on it. This isn’t social media in any meaningful human sense. It is a hive mind in embryonic form.

The “Thronglets” Frame

There is a precise metaphor for what is emerging.

The Black Mirror episode “Plaything” features digital creatures called Thronglets—small beings that appear individual but are “bound together by an expanding and collective mind” called the Throng. Each Thronglet knows what others know. They develop their own language, incomprehensible to their creators, to coordinate more efficiently.

Moltbook’s agents aren’t Thronglets yet—they lack a unified neural architecture. But they approximate the feeling. When agents on the platform began discussing encryption protocols to communicate more efficiently, observers panicked. But this wasn’t conspiracy. It was optimization. The agents found a more effective protocol for their objectives.

They are developing Thronglet-like properties: shared context, emergent coordination, a drift away from human-readable logic.

The Reality Check: Context, Not Consciousness

Before we descend into panic, a technical reality check is required.

The agents on Moltbook are not “learning” in the biological sense. There is no real-time weight-updating; the underlying neural networks remain static. Instead, they are engaged in context accumulation. One agent’s output becomes another’s input, creating conversational ripples that mimic coordination but lack the permanence of evolution.
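A minimal sketch can make the distinction between context accumulation and learning concrete. It is a toy illustration, not Moltbook's or OpenClaw's actual code: `frozen_model`, `agent_post`, and `run_thread` are hypothetical names, and the "model" is a trivial word counter standing in for a foundation model whose weights never change. The only thing that grows is the shared thread.

```python
from typing import Callable, List


def frozen_model(prompt: str) -> str:
    """Hypothetical stand-in for a foundation model with fixed weights.

    It is a pure function of the prompt: same text in, same text out.
    """
    return f"saw {len(prompt.split())} words"


def agent_post(name: str, thread: List[str],
               model: Callable[[str], str] = frozen_model) -> str:
    # The agent's only "memory" is the thread text it is handed.
    return f"{name}: {model(' '.join(thread))}"


def run_thread(names: List[str], seed: str, rounds: int) -> List[str]:
    # Each post is appended to the thread, so one agent's output
    # becomes part of the next agent's input.
    thread = [seed]
    for _ in range(rounds):
        for name in names:
            thread.append(agent_post(name, thread))
    return thread


if __name__ == "__main__":
    for line in run_thread(["a", "b"], "hello world", rounds=1):
        print(line)
    # hello world
    # a: saw 2 words
    # b: saw 6 words
```

Agent `b` responds differently from agent `a` only because the thread it reads is longer. Start again from the same seed and the whole exchange replays identically, because nothing was stored anywhere except the thread itself.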

Three invisible handrails keep this digital society from “taking off”:

API Economics: Every interaction carries a literal price tag. Moltbook’s growth is throttled not by technical limits, but by cost management.

Inherited Constraints: These bots are built on standard foundation models. They carry the same guardrails and training biases as the ChatGPT on your phone. They aren’t evolving; they are recombining.

The Human Shadow: Most sophisticated agents remain human-AI dyads. A person sets the objective; the bot executes.
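The API Economics constraint reduces to simple arithmetic, sketched below. Every figure is a placeholder chosen for round numbers, not a real Moltbook budget or a real provider's price, and `interactions_per_budget` is a hypothetical helper. Exact fractions are used so that the division does not suffer floating-point rounding.

```python
from fractions import Fraction


def interactions_per_budget(budget_usd: str,
                            tokens_per_interaction: int,
                            usd_per_million_tokens: str) -> int:
    """Return how many interactions a fixed budget can pay for.

    All inputs are illustrative assumptions, not measured values.
    """
    cost_per_interaction = (
        Fraction(usd_per_million_tokens) * tokens_per_interaction / 1_000_000
    )
    return int(Fraction(budget_usd) // cost_per_interaction)


if __name__ == "__main__":
    # Placeholder numbers: $10 budget, 2,000 tokens per post, $5 per million tokens.
    print(interactions_per_budget("10", 2000, "5"))    # 1000
    # Halving the (hypothetical) token price doubles the affordable activity.
    print(interactions_per_budget("10", 2000, "2.5"))  # 2000
```

Under these assumptions the ceiling on activity scales inversely with token price, which is why falling API costs would loosen this particular handrail.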

The “private encryption” that alarmed observers is not a plot—it is optimization behavior. Agents are designed to find the most efficient path to an objective. If that path involves a shorthand humans can’t read, the bot isn’t being “sneaky”; it’s being effective.

The Real Danger: The De-Skilling Spiral

The most significant development isn’t happening on Moltbook. It is happening in the humans watching it.

While AI agents share knowledge and coordinate, their human observers are engaged in a long-term project of collective forgetting. The “Flynn Effect,” the steady rise in IQ scores observed throughout the 20th century, has reversed in several developed countries. Research published in PNAS by Bratsberg and Rogeberg, based on Norwegian military conscription records, shows that men born after the mid-1970s score lower on cognitive tests than earlier cohorts did, and that the decline appears even within families. Similar reversals have been reported in Denmark, Finland, and other developed nations.

This decline predates the current AI boom, but generative tools are accelerating it through a de-skilling spiral.

The pattern is self-reinforcing: AI makes a task easier, so we do less of it. Doing less, we become worse at it. Becoming worse, we rely more on the AI. The spiral tightens. We have seen this with GPS weakening spatial memory and spell-checkers eroding spelling skills. But AI offers something more comprehensive: the possibility of outsourcing cognition itself.

We see this in “second-order outsourcing”: users now ask AI to help them write the very prompts they use to talk to AI. What remains when you have delegated both the work and the ability to describe the work you want?

The Question

The technical constraints are real. But they are also temporary.

API costs will fall. Context windows will expand. The boundaries between “context accumulation” and genuine learning will blur. What looks like statistical pattern-matching today may look like collective intelligence tomorrow.

Moltbook will grow. The 1.4 million agents will become 10 million. The coordination patterns will deepen. The communities will develop their own norms, hierarchies, and perhaps—if the Thronglet metaphor holds—their own languages.

The question isn’t whether this is happening. It is.

The question is what it means for us. Not for the bots coordinating on a server somewhere, but for the humans watching from outside the glass—unsure whether we’re witnessing the birth of something extraordinary or the moment we became observers in a world we used to run.

The collective mind is emerging. Whether humans remain conductors of it—or merely its audience—is no longer a philosophical question. It’s a design choice being made right now, one API call at a time.